The second edition of Think DSP is not for sale yet, but if you would like to support this project, you can buy me a coffee.

Saxophone#

Click here to run this notebook on Colab.

import numpy as np

import matplotlib.pyplot as plt

from thinkdsp import decorate

The case of the missing fundamental#

This notebook investigates autocorrelation, pitch perception and a phenomenon called the “missing fundamental”.

I’ll start with a recording of a saxophone.

from thinkdsp import read_wave

wave = read_wave('100475__iluppai__saxophone-weep.wav')

wave.normalize()

wave.make_audio()

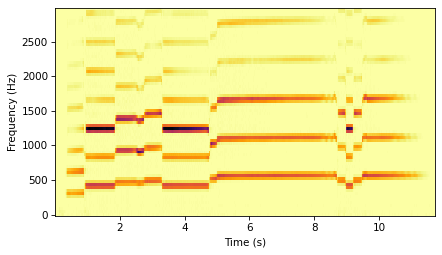

The spectrogram shows the harmonic structure over time.

gram = wave.make_spectrogram(seg_length=1024)

gram.plot(high=3000)

decorate(xlabel='Time (s)', ylabel='Frequency (Hz)')

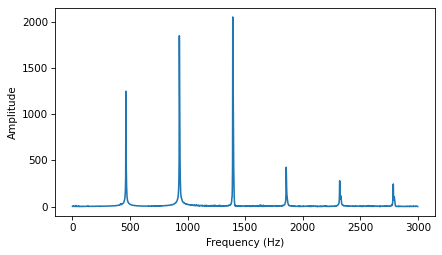

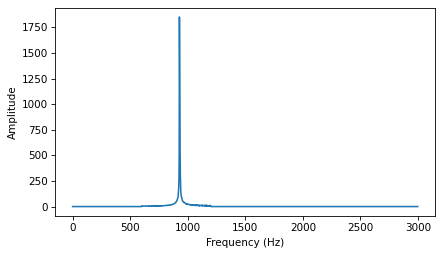

To see the harmonics more clearly, I’ll take a segment near the 2 second mark and compute its spectrum.

start = 2.0

duration = 0.5

segment = wave.segment(start=start, duration=duration)

segment.make_audio()

spectrum = segment.make_spectrum()

spectrum.plot(high=3000)

decorate(xlabel='Frequency (Hz)', ylabel='Amplitude')

The peaks in the spectrum are at 1392, 928, and 464 Hz.

spectrum.peaks()[:10]

[(2054.0622639591206, 1392.0),

(1850.8544230639038, 928.0),

(1684.8468845494765, 1394.0),

(1332.8150506072802, 930.0),

(1249.1774991462646, 464.0),

(1177.6718910227576, 1396.0),

(857.3729096557306, 932.0),

(742.841588837269, 1398.0),

(515.1804113061312, 934.0),

(513.7226300908811, 466.0)]

The pitch we perceive is the fundamental, at 464 Hz, even though it is not the dominant frequency.

For comparison, here’s a triangle wave at 464 Hz.

from thinkdsp import TriangleSignal

TriangleSignal(freq=464).make_wave(duration=0.5).make_audio()

And here’s the segment again.

segment.make_audio()

They have the same perceived pitch.

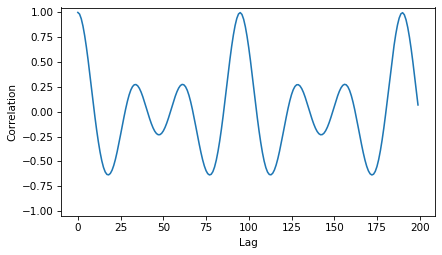

To understand why we perceive the fundamental frequency, even though it is not dominant, it helps to look at the autocorrelation function (ACF).

The following function computes the ACF, selects the second half (which corresponds to positive lags) and normalizes the results:

def autocorr(segment):

corrs = np.correlate(segment.ys, segment.ys, mode='same')

N = len(corrs)

lengths = range(N, N//2, -1)

half = corrs[N//2:].copy()

half /= lengths

half /= half[0]

return half

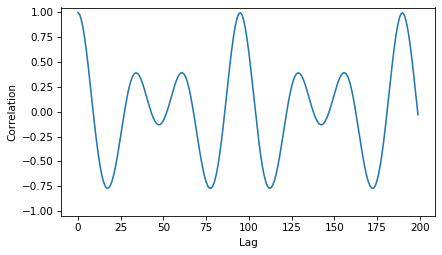

And here’s what the result:

corrs = autocorr(segment)

plt.plot(corrs[:200])

decorate(xlabel='Lag', ylabel='Correlation', ylim=[-1.05, 1.05])

The first major peak is near lag 100.

The following function finds the highest correlation in a given range of lags and returns the corresponding frequency.

def find_frequency(corrs, low, high):

lag = np.array(corrs[low:high]).argmax() + low

print(lag)

period = lag / segment.framerate

frequency = 1 / period

return frequency

The highest peak is at a lag 95, which corresponds to frequency 464 Hz.

find_frequency(corrs, 80, 100)

95

464.2105263157895

At least in this example, the pitch we perceive corresponds to the highest peak in the autocorrelation function (ACF) rather than the highest component of the spectrum.

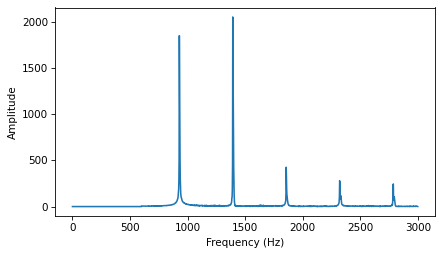

Surprisingly, the perceived pitch doesn’t change if we remove the fundamental completely. Here’s what the spectrum looks like if we use a high-pass filter to clobber the fundamental.

spectrum2 = segment.make_spectrum()

spectrum2.high_pass(600)

spectrum2.plot(high=3000)

decorate(xlabel='Frequency (Hz)', ylabel='Amplitude')

And here’s what it sounds like.

segment2 = spectrum2.make_wave()

segment2.make_audio()

The perceived pitch is still 464 Hz, even though there is no power at that frequency. This phenomenon is called the “missing fundamental”: https://en.wikipedia.org/wiki/Missing_fundamental

To understand why we hear a frequency that’s not in the signal, it helps to look at the autocorrelation function (ACF).

corrs = autocorr(segment2)

plt.plot(corrs[:200])

decorate(xlabel='Lag', ylabel='Correlation', ylim=[-1.05, 1.05])

The third peak, which corresponds to 464 Hz, is still the highest:

find_frequency(corrs, 80, 100)

95

464.2105263157895

But there are two other peaks corresponding to 1297 Hz and 722 Hz.

find_frequency(corrs, 20, 50)

34

1297.0588235294117

find_frequency(corrs, 50, 80)

61

722.9508196721312

So why don’t we perceive either of those pitches, instead of 464 Hz? The reason is that the higher components that are present in the signal are harmonics of 464 Hz and they are not harmonics of 722 or 1297 Hz.

Our ear interprets the high harmonics as evidence that the “right” fundamental is at 464 Hz.

If we get rid of the high harmonics, the effect goes away. Here’s a spectrum with harmonics above 1200 Hz removed.

spectrum4 = segment.make_spectrum()

spectrum4.high_pass(600)

spectrum4.low_pass(1200)

spectrum4.plot(high=3000)

decorate(xlabel='Frequency (Hz)', ylabel='Amplitude')

Now the perceived pitch is 928 Hz.

segment4 = spectrum4.make_wave()

segment4.make_audio()

TriangleSignal(freq=928).make_wave(duration=0.5).make_audio()

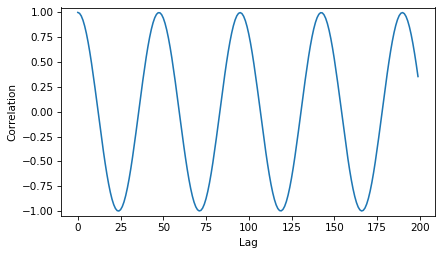

And if we look at the autocorrelation function, we find the highest peak at lag=47, which corresponds to 938 Hz.

corrs = autocorr(segment4)

plt.plot(corrs[:200])

decorate(xlabel='Lag', ylabel='Correlation', ylim=[-1.05, 1.05])

find_frequency(corrs, 30, 50)

47

938.2978723404256

For convenience, here are all the versions together.

A triangle signal at 464 Hz:

TriangleSignal(freq=464).make_wave(duration=0.5).make_audio()

The original segment:

segment.make_audio()

After removing the fundamental:

segment2.make_audio()

After removing the harmonics above the dominant frequency, too.

segment4.make_audio()

And a pure sinusoid:

from thinkdsp import SinSignal

SinSignal(freq=928).make_wave(duration=0.5).make_audio()

In summary, these experiments suggest that pitch perception is not based entirely on spectral analysis, but is also informed by something like autocorrelation.

Think DSP: Digital Signal Processing in Python, 2nd Edition

Copyright 2024 Allen B. Downey

License: Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International