Resampling and Logistic Regression#

Click here to run this notebook on Colab

Different ways of computing sampling distributions – and the statistics derived from them, like standard errors and confidence intervals – yield different results. None of them are right or wrong; rather, they are based on different modeling assumptions. In practice, the differences are often small compared to other sources of error, so we are free to use whichever is convenient.

This article is prompted by a recent question on Reddit:

I am trying to do parametric bootstrap for logistic regression but I don’t know what the random error terms should be. I know how to do it with linear regression since the error terms follow a normal distribution. Really appreciate any pointers to resources about this topic.

One of the responses recommends this paper: “An Application of Bootstrapping in Logistic Regression Model”, by Isaac Akpor Adjei and Rezaul Karim.

The paper suggests two ways to compute the sampling distribution of the parameters of a logistic regression by bootstrap sampling. They characterize one as parametric and the other as non-parametric, and advise:

The non-parametric bootstrap [relies] on weaker assumptions (or no assumptions at all) about the underlying distribution of the population. Statistical practitioners should use non-parametric procedures only in so far as the assumptions about the underlying distribution are seriously doubtful in their validity. … However, when assumptions are not violated, non-parametric procedures will usually have greater variance (in point estimation), less power (in hypothesis testing), wider intervals (in confidence interval estimation), lower probability of correct selection (in ranking and selection) and higher risk (in decision theory) when compared to a corresponding parametric procedure (Efron and Tibshirani, 1994 [1] ).

The premise of this advice is that the parametric and non-parametric methods are answering the same question in different ways, and that one might be preferred over the other because the results are preferable in one way or another. It is also based on the assumption that the sampling distribution of the parameters – and the statistics derived from it – are uniquely and objectively defined.

I disagree with these premises. The parametric and non-parametric methods they present are based on different modeling decisions, so they compute answers to different questions. And the models they are based on are only two of many possible models.

To explain what I mean, I will implement the methods they propose and explain the assumptions each is based on. Then I will propose a third method that is a hybrid of the two. I’ll show that all three methods yield different results, and suggest criteria for when one might be preferred over the others.

The Data#

As an example, I’ll use data from the General Social Survey (GSS). I’ll download an HDF file from the Elements of Data Science repository, which contains a subset of the GSS data that has been resampled to correct for stratified sampling.

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

import seaborn as sns

np.random.seed(0)

from os.path import basename, exists

def download(url):

filename = basename(url)

if not exists(filename):

from urllib.request import urlretrieve

local, _ = urlretrieve(url, filename)

print('Downloaded ' + local)

download('https://github.com/AllenDowney/' +

'ElementsOfDataScience/raw/master/data/gss_eda.hdf')

gss = pd.read_hdf('gss_eda.hdf', 'gss')

gss.shape

(64814, 8)

It includes 64,814 respondents and 8 variables for each respondent.

gss.head()

| YEAR | ID_ | AGE | EDUC | SEX | GUNLAW | GRASS | REALINC | |

|---|---|---|---|---|---|---|---|---|

| 0 | 1972 | 1 | 23.0 | 16.0 | 2 | 1.0 | NaN | 18951.0 |

| 1 | 1972 | 2 | 70.0 | 10.0 | 1 | 1.0 | NaN | 24366.0 |

| 2 | 1972 | 3 | 48.0 | 12.0 | 2 | 1.0 | NaN | 24366.0 |

| 3 | 1972 | 4 | 27.0 | 17.0 | 2 | 1.0 | NaN | 30458.0 |

| 4 | 1972 | 5 | 61.0 | 12.0 | 2 | 1.0 | NaN | 50763.0 |

To demonstrate logistic regression, I’ll use on one of the questions in the General Social Survey, which asks “Do you think the use of marijuana should be made legal or not?”

The responses are in a column called GRASS; here are the values.

gss['GRASS'].value_counts()

2.0 25662

1.0 11884

Name: GRASS, dtype: int64

I’ll use StatsModels, which provides a function that does logistic regression.

First we have to recode the dependent variable so 1 means “yes” and 0 means “no”. We can do that by replacing 2 with 0.

gss['GRASS'].replace(2, 0, inplace=True)

To model quadratic relationships, I’ll add columns that contain the values of AGE and EDUC squared.

gss['AGE2'] = gss['AGE']**2

gss['EDUC2'] = gss['EDUC']**2

And I’ll drop the rows that have missing values for the variables we’ll need.

data = gss.dropna(subset=['AGE', 'EDUC', 'SEX', 'GUNLAW', 'GRASS'])

Logistic Regression#

Here are the results of logistic regression with these variables.

import statsmodels.formula.api as smf

formula = 'GRASS ~ AGE + AGE2 + EDUC + EDUC2 + C(SEX)'

result_hat = smf.logit(formula, data=data).fit()

result_hat.summary()

Optimization terminated successfully.

Current function value: 0.593464

Iterations 6

| Dep. Variable: | GRASS | No. Observations: | 20475 |

|---|---|---|---|

| Model: | Logit | Df Residuals: | 20469 |

| Method: | MLE | Df Model: | 5 |

| Date: | Tue, 17 Jan 2023 | Pseudo R-squ.: | 0.05003 |

| Time: | 08:58:57 | Log-Likelihood: | -12151. |

| converged: | True | LL-Null: | -12791. |

| Covariance Type: | nonrobust | LLR p-value: | 1.555e-274 |

| coef | std err | z | P>|z| | [0.025 | 0.975] | |

|---|---|---|---|---|---|---|

| Intercept | -1.6788 | 0.240 | -6.988 | 0.000 | -2.150 | -1.208 |

| C(SEX)[T.2] | -0.3849 | 0.031 | -12.394 | 0.000 | -0.446 | -0.324 |

| AGE | -0.0278 | 0.005 | -5.399 | 0.000 | -0.038 | -0.018 |

| AGE2 | 0.0001 | 5.28e-05 | 2.190 | 0.029 | 1.21e-05 | 0.000 |

| EDUC | 0.2000 | 0.031 | 6.412 | 0.000 | 0.139 | 0.261 |

| EDUC2 | -0.0029 | 0.001 | -2.450 | 0.014 | -0.005 | -0.001 |

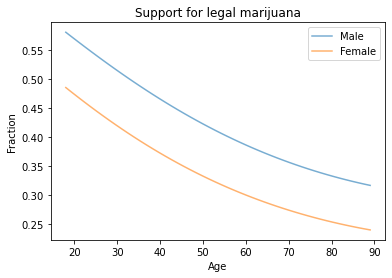

To get a sense of what the results look like, we can plot the predicted probability of saying “yes” as a function of age, for male and female respondents.

df = pd.DataFrame()

df['AGE'] = np.linspace(18, 89)

df['EDUC'] = 16

df['AGE2'] = df['AGE']**2

df['EDUC2'] = df['EDUC']**2

df['SEX'] = 1

pred1 = result_hat.predict(df)

pred1.index = df['AGE']

df['SEX'] = 2

pred2 = result_hat.predict(df)

pred2.index = df['AGE']

pred1.plot(label='Male', alpha=0.6)

pred2.plot(label='Female', alpha=0.6)

plt.xlabel('Age')

plt.ylabel('Fraction')

plt.title('Support for legal marijuana')

plt.legend();

Men are more likely to support legalization than women, and younger people more likely than older people.

Boostrap sampling#

Now let’s implement the method characterized as non-parametric, which is based on bootstrap resampling.

The following function samples the rows of data with replacement and runs the regression model on the resampled data.

options = dict(disp=False, start_params=result_hat.params)

def bootstrap(i):

bootstrapped = data.sample(n=len(data), replace=True)

results = smf.logit(formula, data=bootstrapped).fit(**options)

return results.params

The options sent to fit make it run faster, but don’t affect the results by much.

Each time we run this process, the result represents a single draw from the sampling distribution of the parameters.

%timeit bootstrap(0)

82.2 ms ± 1.89 ms per loop (mean ± std. dev. of 7 runs, 10 loops each)

By running it many times, we generate a sample from the sampling distribution. pqdm runs the sampling process in multiple threads.

try:

from pqdm.threads import pqdm

except ImportError:

!pip install pqdm

from pqdm.threads import pqdm

estimates = pqdm(range(101), bootstrap, n_jobs=4)

sampling_dist = pd.DataFrame(estimates)

sampling_dist.head()

| Intercept | C(SEX)[T.2] | AGE | AGE2 | EDUC | EDUC2 | |

|---|---|---|---|---|---|---|

| 0 | -1.747448 | -0.413202 | -0.027473 | 0.000114 | 0.213299 | -0.003302 |

| 1 | -2.055303 | -0.411384 | -0.029943 | 0.000129 | 0.276153 | -0.005563 |

| 2 | -2.050773 | -0.414960 | -0.023190 | 0.000094 | 0.242778 | -0.004530 |

| 3 | -2.031659 | -0.392391 | -0.029538 | 0.000147 | 0.251942 | -0.004616 |

| 4 | -1.847295 | -0.363829 | -0.031532 | 0.000156 | 0.238870 | -0.004339 |

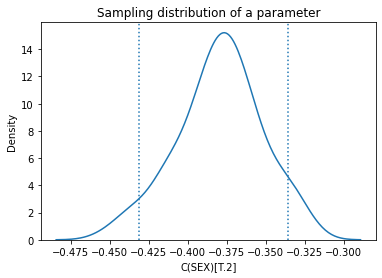

Here’s what the sampling distribution looks like for one of the parameters.

ci90 = sampling_dist['C(SEX)[T.2]'].quantile([0.05, 0.95])

sns.kdeplot(sampling_dist['C(SEX)[T.2]'])

[plt.axvline(x, ls=':') for x in ci90]

plt.title('Sampling distribution of a parameter');

The mean of the sampling distribution should be close to the parameters we estimated with the original dataset, and it is.

pd.DataFrame({"Sampling mean": sampling_dist.mean(),

"Estimates": result_hat.params})

| Sampling mean | Estimates | |

|---|---|---|

| Intercept | -1.681880 | -1.678838 |

| C(SEX)[T.2] | -0.379621 | -0.384919 |

| AGE | -0.027804 | -0.027825 |

| AGE2 | 0.000115 | 0.000116 |

| EDUC | 0.201137 | 0.200002 |

| EDUC2 | -0.002912 | -0.002855 |

The standard deviations of the sampling distributions should be close to the standard errors computed by StatsModels, and they are. Most of them are close enough that the difference probably doesn’t matter in practice.

def standard_errors(sampling_dist, result_hat):

df = pd.DataFrame({"Sampling std": sampling_dist.std(),

"Standard error": result_hat.bse})

num, den = df.values.T

df['Percent diff'] = (num / den - 1) * 100

return df

standard_errors(sampling_dist, result_hat)

| Sampling std | Standard error | Percent diff | |

|---|---|---|---|

| Intercept | 0.248814 | 0.240243 | 3.567779 |

| C(SEX)[T.2] | 0.027137 | 0.031057 | -12.622418 |

| AGE | 0.004944 | 0.005153 | -4.066840 |

| AGE2 | 0.000051 | 0.000053 | -4.198399 |

| EDUC | 0.031756 | 0.031193 | 1.804886 |

| EDUC2 | 0.001197 | 0.001166 | 2.687507 |

Of course, there is nothing magic about the standard errors computed by StatsModels; they are approximations based on a model of the sampling process, just like the results from resampling.

The Resampling Framework#

The purpose of the sampling distribution is to quantify the variability in an estimate due to random sampling. It is the answer to the question, “If we ran this sampling process many times, how much would we expect the results to vary?”

To answer that question, we need a model of the sampling process. We use the model to simulate the sampling process and generate counterfactual datasets that represent other samples the process could have generated.

The following figure represents this framework.

In bootstrap resampling, we treat the rows of the original dataset as if they are the population and draw a random sample from it. We use this simulated data to compute the sample statistic; in this example, it’s the parameters of the logistic regression model. We collect the results to form a sample from the sampling distribution, which we can use to compute confidence intervals and standard errors.

This way of simulating the sampling process asks, in effect, what would have happened if we had surveyed different people. But that’s not the only possible model of the sampling process. An alternative is to ask what would happen if we surveyed the same people, but they gave different answers. That’s the model that underlies the parametric method.

Parametric bootstrap#

So, how do we simulate a sampling process where we survey the same people, but they give different answers? One way is to assume, for the purposes of the simulation, that the parameters we estimated from the original data are correct. If so, we can use the regression model to compute the predicted probability for each respondent, like this:

pi_hat = result_hat.predict(data)

pi_hat.describe()

count 20475.000000

mean 0.317167

std 0.113654

min 0.025989

25% 0.234792

50% 0.317927

75% 0.400791

max 0.630366

dtype: float64

Now we can use these probabilities to generate a biased coin toss for each respondent.

from scipy.stats import bernoulli

simulated = bernoulli.rvs(pi_hat.values)

simulated.mean()

0.3174114774114774

Then we can run the regression model with these simulated values as the dependent variable. The following function shows how.

def bootstrap2(i):

flipped = data.assign(GRASS=bernoulli.rvs(pi_hat.values))

results = smf.logit(formula, data=flipped).fit(**options)

return results.params

Again, the result from a single simulation is a random value from the sampling distribution.

bootstrap2(0)

Intercept -1.805556

C(SEX)[T.2] -0.352934

AGE -0.023101

AGE2 0.000058

EDUC 0.196758

EDUC2 -0.002599

dtype: float64

If we run it many times, we get a sample from the sampling distribution.

from pqdm.threads import pqdm

estimates = pqdm(range(101), bootstrap2, n_jobs=4)

sampling_dist2 = pd.DataFrame(estimates)

sampling_dist2.head()

| Intercept | C(SEX)[T.2] | AGE | AGE2 | EDUC | EDUC2 | |

|---|---|---|---|---|---|---|

| 0 | -1.804015 | -0.384881 | -0.014708 | -0.000017 | 0.180796 | -0.002302 |

| 1 | -1.432664 | -0.422171 | -0.023207 | 0.000044 | 0.166255 | -0.001940 |

| 2 | -1.415658 | -0.406837 | -0.033815 | 0.000168 | 0.180158 | -0.001914 |

| 3 | -1.840253 | -0.394937 | -0.028435 | 0.000126 | 0.221295 | -0.003461 |

| 4 | -1.642430 | -0.375719 | -0.034946 | 0.000177 | 0.216985 | -0.003430 |

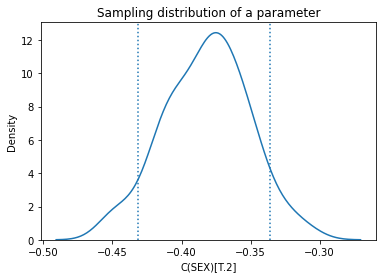

Here’s the sampling distribution for a single parameter.

ci90 = sampling_dist2['C(SEX)[T.2]'].quantile([0.05, 0.95])

sns.kdeplot(sampling_dist2['C(SEX)[T.2]'])

[plt.axvline(x, ls=':') for x in ci90]

plt.title('Sampling distribution of a parameter');

Again, the standard deviations should be close to the standard errors, and they are.

standard_errors(sampling_dist2, result_hat)

| Sampling std | Standard error | Percent diff | |

|---|---|---|---|

| Intercept | 0.199178 | 0.240243 | -17.092962 |

| C(SEX)[T.2] | 0.030494 | 0.031057 | -1.812430 |

| AGE | 0.005085 | 0.005153 | -1.327560 |

| AGE2 | 0.000054 | 0.000053 | 2.118557 |

| EDUC | 0.024899 | 0.031193 | -20.178173 |

| EDUC2 | 0.000935 | 0.001166 | -19.819110 |

So we have two models of the sampling process that yield different sampling distributions. But these are not the only models.

If the first method asks, “What if we surveyed different people?” and the second asks “What if we surveyed the same people and they gave different answers?”, let’s consider a third method that asks “¿Por qué no los dos?”.

The Hybrid Model#

The following function uses bootstrap sampling to simulate surveying different people; then it uses the parametric method to simulate their responses.

def bootstrap3(i):

bootstrapped = data.sample(n=len(data), replace=True)

pi_hat = result_hat.predict(bootstrapped)

flipped = bootstrapped.assign(GRASS=bernoulli.rvs(pi_hat.values))

results = smf.logit(formula, data=flipped).fit(**options)

return results.params

bootstrap3(0)

Intercept -1.655314

C(SEX)[T.2] -0.386624

AGE -0.024265

AGE2 0.000083

EDUC 0.182822

EDUC2 -0.002334

dtype: float64

from pqdm.threads import pqdm

estimates = pqdm(range(101), bootstrap3, n_jobs=4)

sampling_dist3 = pd.DataFrame(estimates)

sampling_dist3.head()

| Intercept | C(SEX)[T.2] | AGE | AGE2 | EDUC | EDUC2 | |

|---|---|---|---|---|---|---|

| 0 | -2.019658 | -0.385612 | -0.025974 | 0.000108 | 0.249717 | -0.005012 |

| 1 | -1.648528 | -0.351063 | -0.025192 | 0.000100 | 0.182666 | -0.002312 |

| 2 | -1.780499 | -0.445770 | -0.032862 | 0.000173 | 0.242922 | -0.004569 |

| 3 | -1.534347 | -0.464670 | -0.029799 | 0.000125 | 0.189228 | -0.002218 |

| 4 | -1.336757 | -0.404636 | -0.030217 | 0.000137 | 0.160754 | -0.001453 |

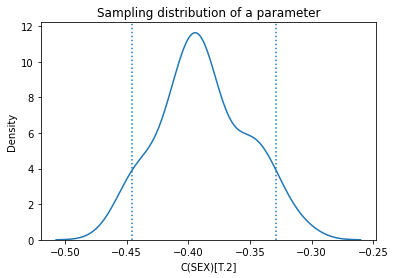

Here’s the sampling distribution for one of the parameters.

ci90 = sampling_dist3['C(SEX)[T.2]'].quantile([0.05, 0.95])

sns.kdeplot(sampling_dist3['C(SEX)[T.2]'])

[plt.axvline(x, ls=':') for x in ci90]

plt.title('Sampling distribution of a parameter');

Again, the standard errors are comparable to the ones computed by StatsModels.

standard_errors(sampling_dist3, result_hat)

| Sampling std | Standard error | Percent diff | |

|---|---|---|---|

| Intercept | 0.236901 | 0.240243 | -1.390859 |

| C(SEX)[T.2] | 0.035600 | 0.031057 | 14.629106 |

| AGE | 0.005769 | 0.005153 | 11.939358 |

| AGE2 | 0.000061 | 0.000053 | 15.337773 |

| EDUC | 0.031469 | 0.031193 | 0.884552 |

| EDUC2 | 0.001173 | 0.001166 | 0.613167 |

Now we have four ways to compute sampling distributions, confidence intervals, and standard errors – and they yield different results. So you might wonder…

Which One Is Right?#

None of them are right. They are based on different models of the sampling process, so they quantify different sources of uncertainty.

In some cases we might find that different methods converge on the same results, asymptotically, under certain assumptions. But that doesn’t really matter, because with finite data sets, the results are not generally the same. So the important question is whether the differences are big enough to matter in practice.

In this example, it is easy to implement multiple models and compare the results. If they were substantially different, we would need to think more carefully about the modeling assumptions they are based on and choose the one we think is the best description of the data-generating process.

But in this example, the differences are small enough that they probably don’t matter in practice. So we are free to choose whichever is easiest to implement, or fastest to compute, or convenient in some other way.

It is a common error to presume that the result of an analytic method is uniquely correct, and that results from computational methods like resampling are approximations to it. Analytic methods are often fast to compute, but they are always based on modeling assumptions and usually based on approximations, so they are no more correct than computational methods.

Copyright 2023 Allen Downey

Attribution-NonCommercial-ShareAlike 4.0 International (CC BY-NC-SA 4.0)